Written by Data Detective

“Civilization advances by extending the number of important operations which we can perform without thinking about them.” — Alfred North Whitehead

If you’re anything like me, the term data engineering might’ve sounded boring at first. Like some grey-suited person automating spreadsheets in a dark corner of the internet. I mean, who gets excited by hearing the word ‘pipeline’?

Turns out, a lot of people do. Because once you look under the hood, data engineering is the quiet powerhouse that makes everything from your Spotify Wrapped to fraud detection to ChatGPT actually work. It’s like plumbing. If it breaks, everything else floods.

So in this post, I want to map out what data engineers really do, how their work shows up in the apps and decisions around us, and why it’s way more exciting than just loading CSVs into a database (though yes, sometimes we do that too).

So… What Even Is Data Engineering?

Let’s start simple.

Data engineering is about moving, cleaning, and organizing data so that others (analysts, ML models, dashboards, decision-makers, your future robot overlords) can use it to do cool stuff.

But don’t let that fool you. That deceptively short sentence hides an entire universe of work, from streaming billions of records in real time to building bulletproof pipelines that don’t collapse every time someone uploads a weird Excel file.

The Data Engineering Lifecycle

Here’s the basic lifecycle of a data engineer’s job, a journey that begins with messy chaos and ends with beautifully structured, queryable magic:

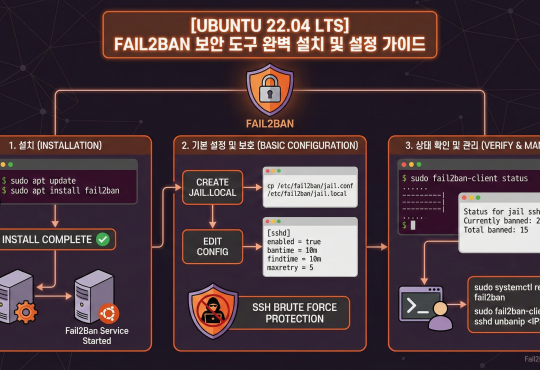

1. Ingest

Raw data lives in many different sources: APIs, databases, logs, files, sensors, toasters, etc. We have to pull it all into a centralized repository. The pulls can run in batch (at intervals of a few to many hours) or as a stream (real-time). Some of the tools used to ingest are Airbyte, Kafka, or custom Python scripts with way too many ‘try/except’s.
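To make the "custom Python script" option a bit more concrete, here is a minimal sketch of a batch pull that retries when the source is flaky. Everything in it is an assumption for illustration: `ingest_batch`, `make_flaky_source`, and the sample records are made-up names and data, and the fake source stands in for a real API or database call.

```python
import time


def ingest_batch(fetch, retries=3, delay_seconds=0.0):
    """Call fetch() until it succeeds or the retry budget runs out."""
    last_error = None
    for attempt in range(1, retries + 1):
        try:
            return fetch()
        except ConnectionError as exc:
            # Remember the failure and back off a little longer each attempt.
            last_error = exc
            time.sleep(delay_seconds * attempt)
    raise RuntimeError(f"ingest failed after {retries} attempts") from last_error


def make_flaky_source(failures):
    """Hypothetical source that fails `failures` times, then returns records."""
    state = {"calls": 0}

    def fetch():
        state["calls"] += 1
        if state["calls"] <= failures:
            raise ConnectionError("source unavailable")
        return [{"id": 1, "event": "play"}, {"id": 2, "event": "skip"}]

    return fetch


# The first two calls fail and the third succeeds, so the batch still lands.
records = ingest_batch(make_flaky_source(failures=2))
```

One `try/except` with a bounded retry count is usually easier to reason about than many scattered ones: the pull either returns a full batch or fails loudly.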

2. Clean & Transform

The data we get is rarely in the best format, so we need to clean it and transform it. That means fixing typos, unifying formats, converting units, handling missing values, merging duplicates, and anonymizing sensitive fields. This is where bad data goes to be reborn. Some of the tools used for this process are Python, dbt, Spark, and a lot of SQL sorcery.
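Here is a small sketch of what a few of those cleaning steps might look like in plain Python: unifying date formats, handling a missing value, and merging duplicates. The sample rows, the field names, and the choice of email as the deduplication key are all assumptions made up for this example.

```python
from datetime import datetime

# Hypothetical raw rows: mixed date formats, stray whitespace, a missing value.
RAW = [
    {"email": " Ana@Example.com ", "signup": "2024-01-05", "height_cm": "170"},
    {"email": "ana@example.com", "signup": "05/01/2024", "height_cm": ""},
    {"email": "bo@example.com", "signup": "2024-02-10", "height_cm": "182"},
]

# Assumed input formats; anything else is treated as unparseable.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean(rows):
    """Normalize each row and merge duplicates that share an email."""
    merged = {}
    for row in rows:
        email = row["email"].strip().lower()
        height = row["height_cm"].strip()
        cleaned = {
            "email": email,
            "signup": parse_date(row["signup"].strip()),
            "height_cm": float(height) if height else None,
        }
        if email not in merged:
            merged[email] = cleaned
        else:
            # Keep the first row, but fill its gaps from later duplicates.
            for key, value in cleaned.items():
                if merged[email][key] is None:
                    merged[email][key] = value
    return list(merged.values())


CLEANED = clean(RAW)
```

The two "ana" rows collapse into one record, and both date styles end up in the same ISO format. Real pipelines would more likely do this in dbt, Spark, or SQL, but the logic has the same shape.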

3. Store

After cleaning data from disparate sources, we send it to a place where it can chill safely, usually a data warehouse like BigQuery or Snowflake, or a data lake built on object storage like S3 or GCS.
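As a rough sketch of the store step, the snippet below loads cleaned rows into an in-memory SQLite database. SQLite is only a stand-in here so the example runs anywhere; it is not BigQuery or Snowflake, and the `users` table, its columns, and the `store` function are assumptions for illustration.

```python
import sqlite3


def store(conn, rows):
    """Load rows into a `users` table; rerunning the load does not duplicate them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users ("
        "email TEXT PRIMARY KEY, signup TEXT, height_cm REAL)"
    )
    # INSERT OR REPLACE overwrites any existing row with the same primary key.
    conn.executemany(
        "INSERT OR REPLACE INTO users (email, signup, height_cm) "
        "VALUES (:email, :signup, :height_cm)",
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
rows = [
    {"email": "ana@example.com", "signup": "2024-01-05", "height_cm": 170.0},
    {"email": "bo@example.com", "signup": "2024-02-10", "height_cm": 182.0},
]
store(conn, rows)
store(conn, rows)  # a rerun of the same batch, e.g. after a retry
ROW_COUNT = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```

Keying the table on `email` makes the load idempotent: if the pipeline retries a batch, the table still ends up with one row per user.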

4. Serve

The data is finally ready to be used, so we have to make it accessible to the teams and apps that need it. This could mean exposing it through APIs or feeding it to analytics dashboards and machine learning models. Because if the data just sits there, did it even exist?
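Serving can be as simple as a query that turns stored rows into something a dashboard or API can consume. The sketch below assumes a hypothetical `users` table in SQLite (again just a local stand-in for a warehouse) and a made-up `signups_per_month` function that returns JSON-ready records.

```python
import json
import sqlite3


def signups_per_month(conn):
    """Aggregate signups by month into a list of JSON-ready dicts."""
    cursor = conn.execute(
        "SELECT substr(signup, 1, 7) AS month, COUNT(*) AS signups "
        "FROM users GROUP BY month ORDER BY month"
    )
    return [{"month": month, "signups": count} for month, count in cursor]


# Hypothetical stored data, with signup dates already cleaned to ISO format.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (email TEXT PRIMARY KEY, signup TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [
        ("ana@example.com", "2024-01-05"),
        ("bo@example.com", "2024-02-10"),
        ("cy@example.com", "2024-02-21"),
    ],
)

# This string is what an API endpoint or dashboard backend might return.
PAYLOAD = json.dumps(signups_per_month(conn))
```

Note that the monthly grouping only works because the dates were unified upstream, which is a small example of how each stage leans on the one before it.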

Wait, Isn’t That What Data Scientists Do?

Let’s clear up the confusion. There are other data roles too, and each one has different priorities.

Data Engineer: “How do I move and structure this data?”

Data Scientist: “What can I predict or explain with this data?”

Data Analyst: “What’s happening in the data?”

Of course, in smaller teams, these roles blur. But trust me, nobody wants their ML model failing because the timestamp column had emojis.